TensorFlow ABC

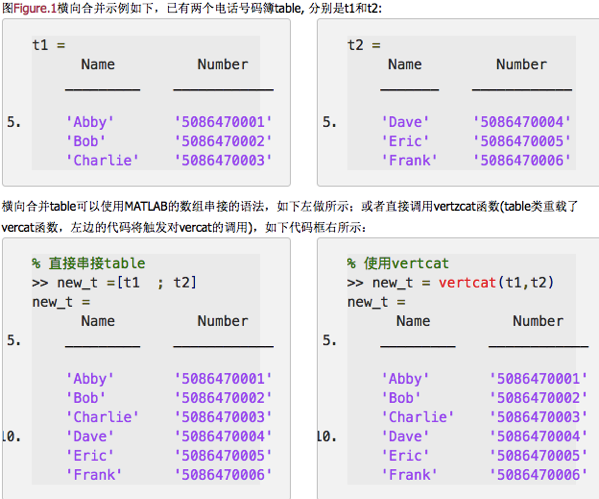

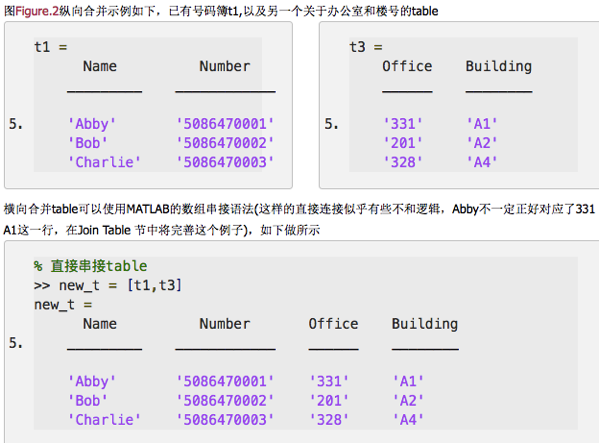

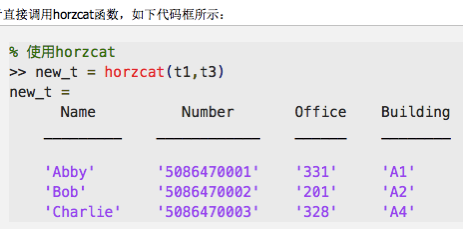

by allenlu2007

Reference: http://www.learningtensorflow.com/

Reference: http://learningtensorflow.com/lesson4/

Reference: https://deeplearning4j.org/compare-dl4j-torch7-pylearn

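I first studied computer vision (CV) with OpenCV (C/C++ core with a Python interface), which mostly covers traditional algorithms and has relatively little in the way of deep learning architectures and algorithms. OpenCV is result-oriented: it does not help much with debugging or with visualizing inputs, outputs, and intermediate results.

Next came the free software from various universities: Caffe, Torch, Theano, and others. The most widely used is Caffe (Python interface), which did a great deal to popularize deep learning, especially for computer vision. Caffe's limitation is that it targets computer vision and machine vision applications; it is a poor fit for text, sound, and time-series applications (e.g. reinforcement learning?). Does Caffe2 improve on this?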

Why TensorFlow?

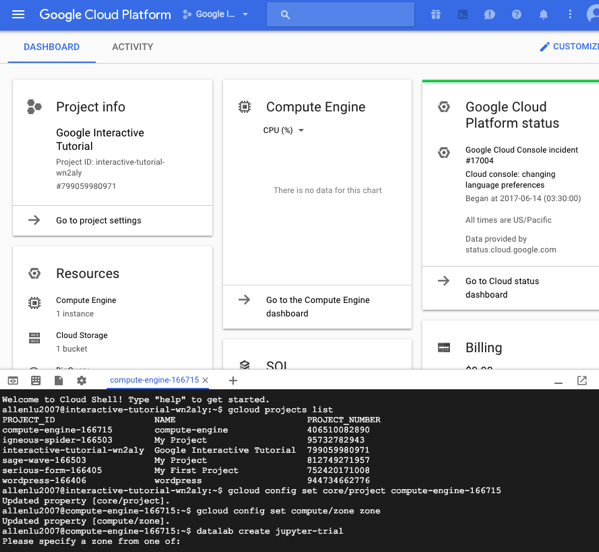

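Then came Google's TensorFlow (a Python API over a C/C++ engine), a next-generation framework that inherits Theano's strengths (a Theano creator joined Google). TensorFlow, Caffe, and Theano can do much the same things (AlexNet, GoogLeNet, etc.), but TensorFlow has at least two major advantages: (1) it provides a visualization tool, TensorBoard, which is very important for debugging and for understanding network behavior; (2) Google Cloud Platform (GCP) comes with built-in TensorFlow software and tools for large-scale training and testing, which is just as important.

Recently MATLAB (R2017a) has woken up and started catching up. MATLAB's strengths are its existing algorithms and toolboxes (e.g. signal processing, control) and its visualization tools. It also emphasizes parameter optimisation and code generation for embedded applications, so it has a chance of becoming a strong latecomer. However, it does not integrate with the cloud.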

What is TensorFlow?

First, the origin of the name. "Tensor" comes from differential geometry; in practice it is a multi-dimensional array (the geometric object itself is independent of the coordinate system). Tensors are generally used to represent multi-dimensional features.

"Flow", as the name suggests, comes from graph theory (e.g. probabilistic graphical models): data flows from node to node in tensor form, through operations such as MAC (multiply-accumulate), activation, and pooling.

TensorFlow mainly uses a directed graph to build complex (deep) learning networks, such as multi-layer convolutional neural networks (AlexNet, GoogLeNet, ResNet, etc.), long short-term memory (LSTM) RNNs, or even more complex networks.
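To make "multi-dimensional array" concrete, here is a minimal sketch in plain Python that reports the shape of nested lists. The `shape` helper is hypothetical and written only for illustration; it is not part of TensorFlow, and it assumes the nested lists are rectangular.

```python
def shape(t):
    """Return the shape of a rectangular nested list as a tuple."""
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0] if t else None  # descend into the first element
    return tuple(dims)

scalar = 7.0                        # rank 0: a single number
vector = [1.0, 2.0, 3.0]            # rank 1: e.g. a feature vector
matrix = [[1, 2, 3], [4, 5, 6]]     # rank 2: e.g. a batch of feature vectors
# rank 4: e.g. a batch of 2 images, 4x4 pixels, 3 channels each
images = [[[[0] * 3 for _ in range(4)] for _ in range(4)] for _ in range(2)]

print(shape(scalar), shape(vector), shape(matrix), shape(images))
```

The rank is simply the number of entries in the shape tuple.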

Graph and Session

At run time, TensorFlow first builds the graph and then executes it.

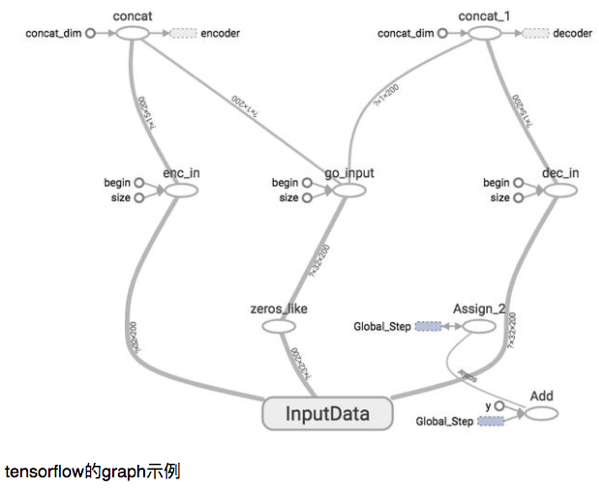

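The figure shows a graph that TensorFlow executes. A (graph) node represents a data operation; a link represents the tensor flowing between nodes.

Note that the graph is a single tf.Graph object; each node in it is a tf.Operation and each link is a tf.Tensor, so one graph contains many operation objects.

A Session, in turn, is the runtime that executes a graph; each session is bound to one graph.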

A session has two modes, batch mode and interactive mode, created as follows.

sess = tf.Session()

sess = tf.InteractiveSession()

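In batch mode, the session starts with sess = tf.Session(), followed by sess.run().

In interactive mode, the session starts with sess = tf.InteractiveSession(), which installs itself as the default session. Results can then be obtained with Tensor.eval() and Operation.run() without passing the session explicitly. This is very handy, e.g. accuracy.eval(), cross_entropy.eval(), or op.run(); sess.run() still works as well.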

Variable, Constant, Placeholder

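Constants, variables, and placeholders are all tensors. Together they hold the features (input, intermediate, output, label) and the weights (or filters). Constants and variables need little explanation, except that in TensorFlow they take tensor form (multi-dimensional arrays), just as a MATLAB variable is an array by default.

The placeholder is the more unusual one. It is essentially a (tensor) variable whose value is supplied later; from the graph's point of view, placeholders act as the graph's inputs. The second reference above explains: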

So far we have used Variables to manage our data, but there is a more basic structure, the placeholder. A placeholder is simply a variable that we will assign data to at a later date. It allows us to create our operations and build our computation graph, without needing the data. In TensorFlow terminology, we then feed data into the graph through these placeholders.

Why Placeholder?

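Why do we need placeholders? I only understood after reading this article: https://read01.com/AM4BQy.html. Quoting directly:

In fact, both Theano and TensorFlow are symbolic computation frameworks, not necessarily designed specifically for deep learning. If you have a computing task entirely unrelated to deep learning that you want to run on a GPU, you can perfectly well write and run it with Theano or TensorFlow.

"Symbolic" here refers to symbolic programming as a style. The contrasting style is imperative programming.

Suppose we want the sum of two numbers a and b. Usually we just assign values to a and b and then compute a + b; this is how normal people write it (imperative programming):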

a=3

b=5

z = a + b

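At the first line, a really is 3. At the second line, b really is 5. At the third line, the computer really adds the values of a and b and assigns the result to z.

Nothing magical about it.

But there are always the not-so-normal ones, who think about it this way: the task a + b can be split into three steps. (1) Declare two variables a and b, and create an output variable z. (2) Establish the computational relationship between a, b, and z: z = a + b. (3) Assign the two values to a and b, and compute the result z.

The latter approach, "first fix the symbols and the computational relationships between them, and only then put data in to compute", is symbolic programming. When you declare a and b, they are empty. When you establish z = a + b, a, b, and z are still empty. Only when you actually put data into a and b does the program start computing.

The computational relationships between symbols are called the computation graph.

This is not done out of boredom. One major advantage of symbolic computation is that, once the relationship between inputs and outputs is established, it can be simplified automatically before any computation runs, which reduces the amount of computation and increases speed. Another advantage is that once the computation graph is fixed, the whole computation is known in advance, so memory can be reused to reduce the program's memory footprint.

A placeholder simply reserves a spot for a and b so that values can be assigned later!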
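The three steps in the quote can be sketched in plain Python. This is a toy illustration only: the `Placeholder`, `Add`, and `run` names are hypothetical stand-ins written for this example, not TensorFlow's implementation.

```python
class Node:
    """Base class for nodes of a tiny computation graph (illustration only)."""
    def __add__(self, other):
        # Adding two nodes builds a new graph node; nothing is computed here.
        return Add(self, other)

class Placeholder(Node):
    """An empty input slot whose value is supplied at run time."""
    def __init__(self, name):
        self.name = name
    def evaluate(self, feed):
        return feed[self]

class Add(Node):
    """Records the relationship z = left + right."""
    def __init__(self, left, right):
        self.left, self.right = left, right
    def evaluate(self, feed):
        return self.left.evaluate(feed) + self.right.evaluate(feed)

def run(node, feed_dict):
    """Evaluate a node given values for its placeholders."""
    return node.evaluate(feed_dict)

# Step 1: declare the symbols; they hold no data yet.
a = Placeholder("a")
b = Placeholder("b")
# Step 2: establish the relationship; z is a graph node, not a number.
z = a + b
# Step 3: feed in the data and compute.
result = run(z, feed_dict={a: 3, b: 5})
print(result)  # 8
```

Because `z` exists as a data structure before any numbers arrive, a real framework can inspect and simplify it before running it, which is the advantage the quote describes.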

Taking the simplest case, softmax, as an example:

import tensorflow as tf

tf.reset_default_graph()

# graph.version starts with 0

x=tf.placeholder(tf.float32,[None,784],name="x-in")

y_=tf.placeholder(tf.float32,[None,10],name="y-in")

# the following is softmax

W = tf.Variable(tf.zeros([784, 10]))

b = tf.Variable(tf.zeros([10]))

y = tf.nn.softmax(tf.matmul(x, W) + b)

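x (input) and y_ (ground-truth label) are placeholders. W and b are variables, and y is the output tensor computed from them.

When does a placeholder receive its input data? When the session runs: the data is passed in through feed_dict (the feed dictionary).

Here is a simple example.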
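As a rough sketch of what y = softmax(xW + b) computes, here is the same forward pass in plain Python for a single input. The sizes (4 inputs, 3 classes) and the input values are made-up stand-ins for 784 and 10, and the `softmax` and `affine` helpers are written for this example rather than taken from TensorFlow.

```python
import math

def softmax(logits):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def affine(x, W, b):
    """Compute xW + b for one input vector x (W is n_in x n_out)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
            for j in range(len(b))]

n_in, n_out = 4, 3                         # small stand-ins for 784 and 10
W = [[0.0] * n_out for _ in range(n_in)]   # zero-initialized, like tf.zeros
b = [0.0] * n_out
x = [0.5, 0.1, 0.9, 0.3]                   # made-up input features

y = softmax(affine(x, W, b))
print(y)  # every class gets the same probability, 1/3
```

With all-zero W and b every class is equally likely, which is why the untrained model starts at chance-level accuracy.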

Session with placeholder and feed_dict (1-D and 2-D array)

import tensorflow as tf

x = tf.placeholder("float", None)

y = x * 2

with tf.Session() as session:

    result = session.run(y, feed_dict={x: [1, 2, 3]})

    print(result)

import tensorflow as tf

x = tf.placeholder("float", [None, 3])

y = x * 2

with tf.Session() as session:

    x_data = [[1, 2, 3], [4, 5, 6]]

    result = session.run(y, feed_dict={x: x_data})

    print(result)

Session with variables: one extra step, tf.global_variables_initializer()

import tensorflow as tf

x = tf.constant([35, 40, 45], name='x')

y = tf.Variable(x + 5, name='y')

model = tf.global_variables_initializer()

with tf.Session() as session:

    session.run(model)

    print(session.run(y))

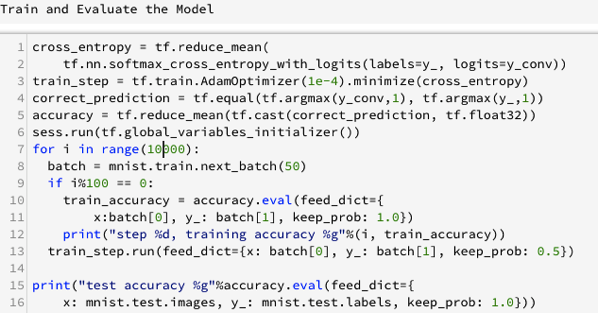

Loss function and Optimiser (in Training)

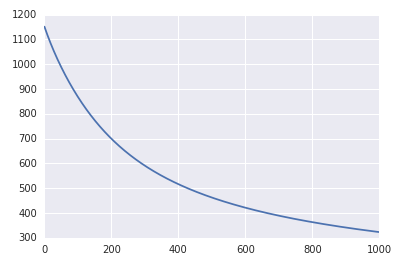

Machine learning, and deep learning in particular, is fundamentally an optimisation problem: you supply a loss function and invoke an optimiser.

One way is to call train_step.run:

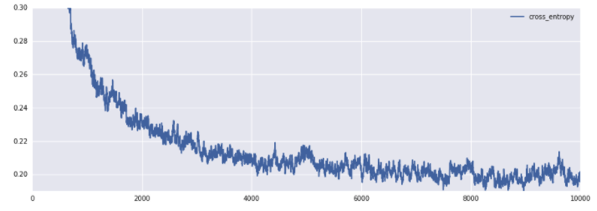

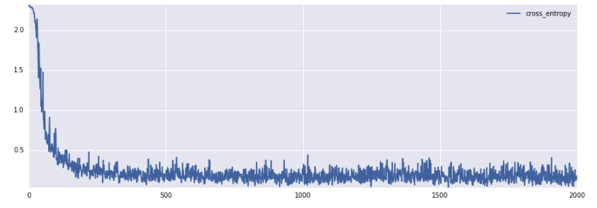

For softmax, the loss function is cross-entropy and the optimiser is mini-batch stochastic gradient descent.
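Written out, the loss and the update look roughly as follows. The notation is an assumption made for this note: N is the batch size, y'_{nk} is the one-hot label (y_ in the code), y_{nk} is the softmax output for sample n and class k, and \eta is the learning rate.

```latex
\begin{aligned}
y_n &= \operatorname{softmax}(x_n W + b) \\
L(W, b) &= -\frac{1}{N} \sum_{n=1}^{N} \sum_{k=1}^{10} y'_{nk} \log y_{nk} \\
W &\leftarrow W - \eta \, \frac{\partial L}{\partial W}, \qquad
b \leftarrow b - \eta \, \frac{\partial L}{\partial b}
\end{aligned}
```

Each training step evaluates L on one mini-batch and applies the update once.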

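# Assumes `mnist` is already loaded and a default (interactive) session is active with variables initialized.

# softmax_cross_entropy_with_logits applies softmax internally, so pass the raw logits rather than y.

logits = tf.matmul(x, W) + b

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=logits))

train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

for _ in range(1000):

    batch = mnist.train.next_batch(100)

    train_step.run(feed_dict={x: batch[0], y_: batch[1]})

The other way is to use sess.run:

# keep_prob is assumed to be a dropout placeholder defined elsewhere in the model.

for i in range(n_train):

    batch_xs, batch_ys = mnist.train.next_batch(batchSize)

    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys, keep_prob: 1.0})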
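To see what a single train_step does numerically, here is a minimal sketch of gradient descent on softmax cross-entropy in plain Python. The four-sample dataset, the two-class setup, and the `forward`, `loss`, and `sgd_step` helpers are all made up for illustration; labels are class indices rather than one-hot vectors, and the whole dataset is used as one batch.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, W, b):
    """Class probabilities softmax(xW + b) for one input vector."""
    logits = [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
              for j in range(len(b))]
    return softmax(logits)

def loss(batch, W, b):
    """Mean cross-entropy over a batch of (features, class_index) pairs."""
    return -sum(math.log(forward(x, W, b)[label]) for x, label in batch) / len(batch)

def sgd_step(batch, W, b, lr):
    """One gradient-descent update of W and b, in place."""
    n = len(batch)
    grad_W = [[0.0] * len(b) for _ in W]
    grad_b = [0.0] * len(b)
    for x, label in batch:
        p = forward(x, W, b)
        for j in range(len(b)):
            # Gradient of cross-entropy w.r.t. logit j is (p_j - target_j).
            delta = (p[j] - (1.0 if j == label else 0.0)) / n
            grad_b[j] += delta
            for i in range(len(x)):
                grad_W[i][j] += x[i] * delta
    for j in range(len(b)):
        b[j] -= lr * grad_b[j]
        for i in range(len(W)):
            W[i][j] -= lr * grad_W[i][j]

# Made-up data: 2 features, 2 classes.
data = [([1.0, 0.0], 0), ([0.9, 0.1], 0), ([0.0, 1.0], 1), ([0.2, 0.8], 1)]
W = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]

loss_before = loss(data, W, b)   # log(2): both classes equally likely
for _ in range(100):
    sgd_step(data, W, b, lr=0.5)
loss_after = loss(data, W, b)
print(loss_before, loss_after)
```

TensorFlow derives the gradients from the graph automatically; the hand-written `delta` above is only there to show what is being computed.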

Evaluation (in both Training and Testing)

Evaluation serves two purposes: during training, it monitors the training (classification) error, which is not the same thing as the loss function; after training, it gives the final classification error on the test set.

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))

for i in range(n_train):

    batch_xs, batch_ys = mnist.train.next_batch(batchSize)

    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys, keep_prob: 1.0})

testAccuracy = sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0})

Or, alternatively:

correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(y_,1))

accuracy=tf.reduce_mean(tf.cast(correct_prediction,tf.float32))

accuracy.eval(feed_dict={x:mnist.test.images,y_:mnist.test.labels})
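The accuracy computation itself is simple enough to check by hand. Here is a minimal plain-Python sketch of the same argmax, equal, cast, mean chain; the predictions and one-hot labels are made-up values, and the `argmax` and `accuracy` helpers are written for this example.

```python
def argmax(values):
    """Index of the largest entry, like tf.argmax along one row."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(predictions, labels):
    """Fraction of rows where the predicted class matches the label."""
    correct = [1.0 if argmax(p) == argmax(t) else 0.0
               for p, t in zip(predictions, labels)]
    return sum(correct) / len(correct)

# Made-up softmax outputs for 4 samples and 3 classes.
predictions = [[0.1, 0.7, 0.2],
               [0.8, 0.1, 0.1],
               [0.3, 0.3, 0.4],   # predicts class 2, label is class 1
               [0.2, 0.5, 0.3]]
labels = [[0, 1, 0],
          [1, 0, 0],
          [0, 1, 0],
          [0, 1, 0]]

print(accuracy(predictions, labels))  # 0.75
```

Three of the four rows match, so the accuracy is 0.75 and the classification error is 0.25.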