Kalman filter and smoother 和 HMM filter 的異同

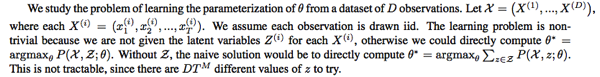

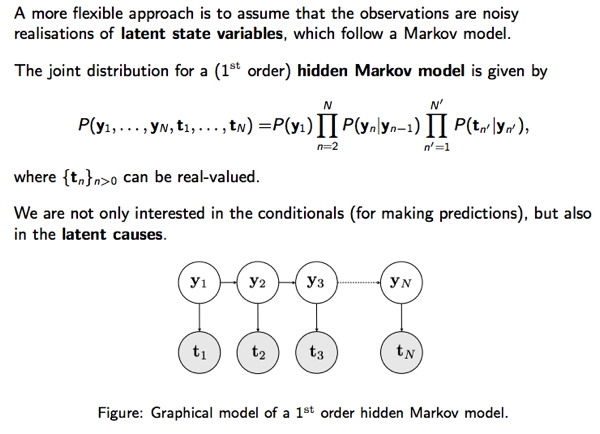

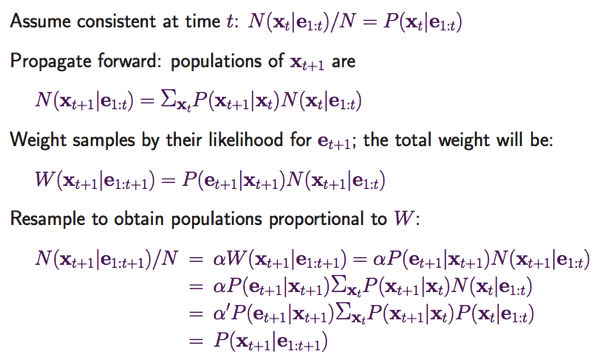

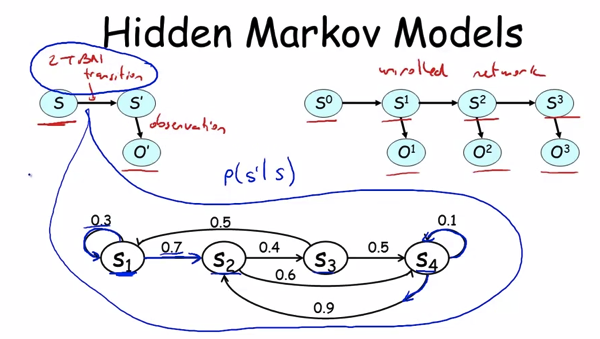

HMM or Baysian filter:

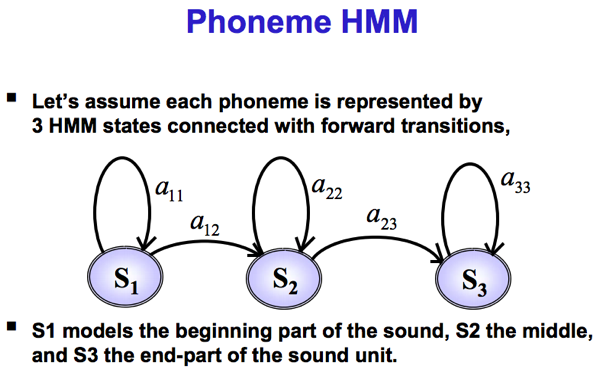

1. discrete state

2. probabilistic model

3. transition probability and emission probability matrix

4. PMF; no assumption of Gaussian or linear what so ever

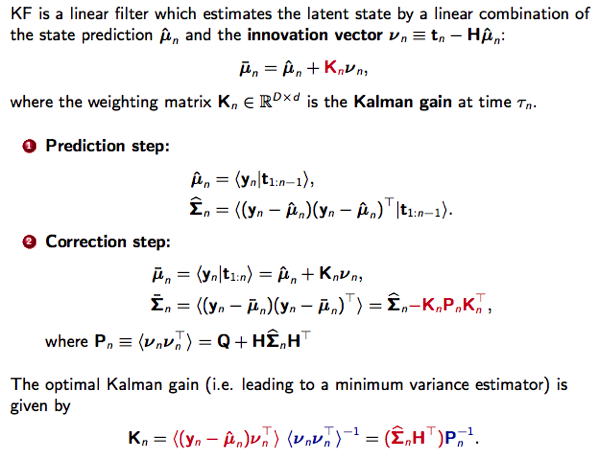

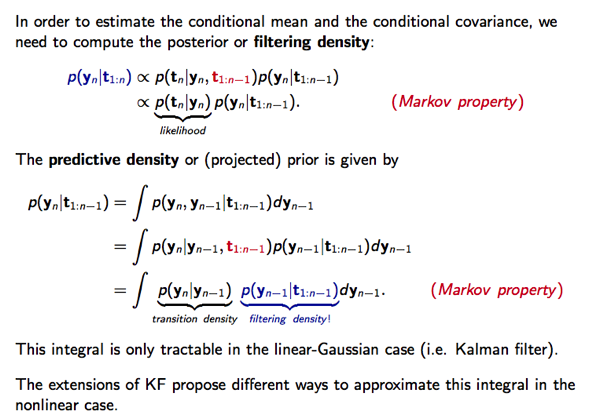

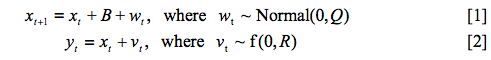

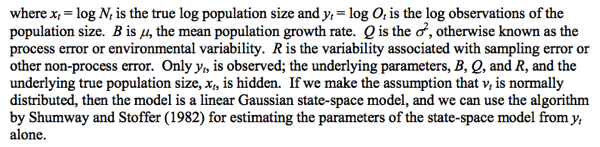

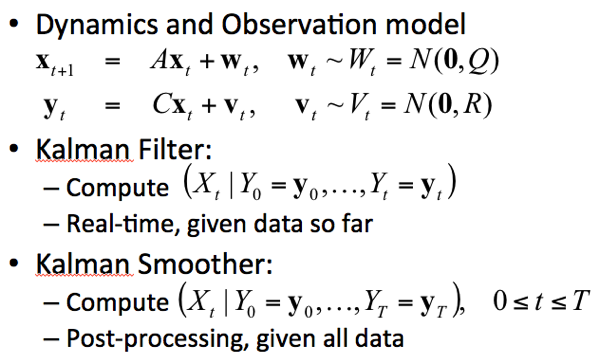

KF is a special case of HMM

1. continuous state (and infinite states)!

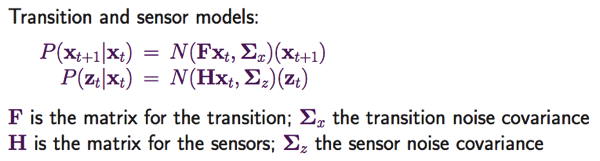

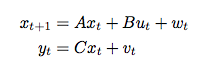

2. state space model

2. linear state space model!! Very strong assumption!

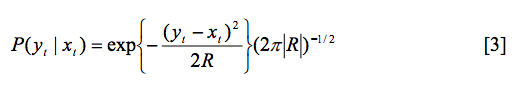

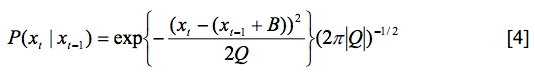

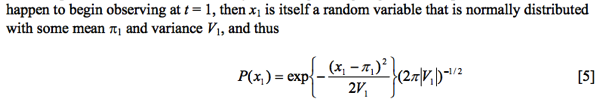

3. Transition pdf and emission pdf are all Gaussian. Therefore, everything is Gaussian

State, transition, and emission pdf are Gaussian PDF

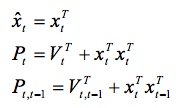

Therefore, the estimate state = mean of the PDF, and covariance matrix of the PDF

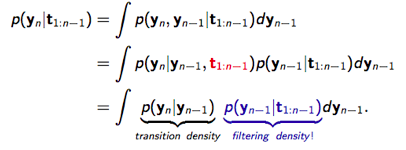

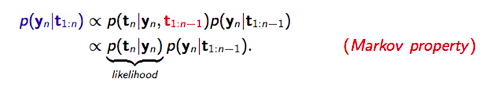

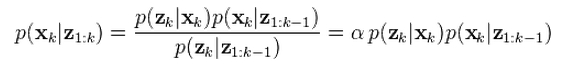

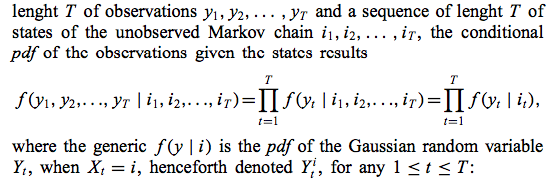

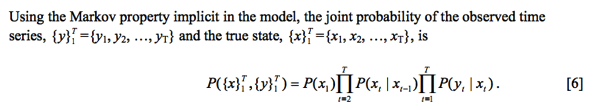

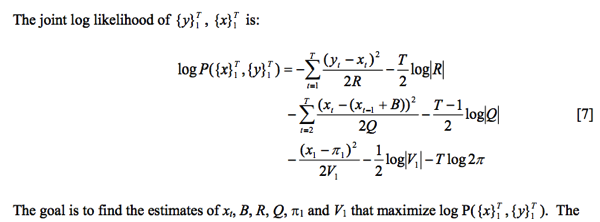

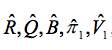

細節可以參考 Hidden Markov Model of Filtering, Smoothing.

Kalman filter and smoother 和 HMM Viterbi algorithm 的不同

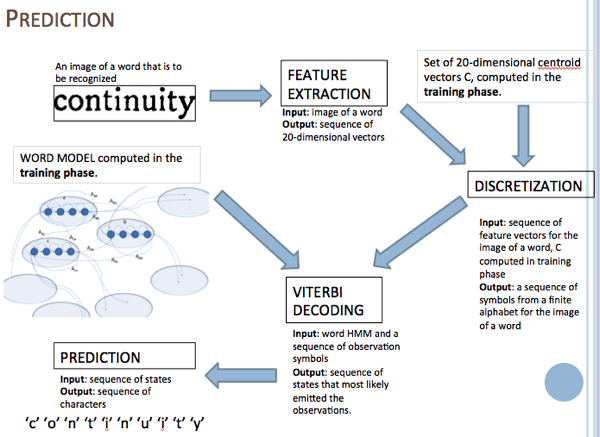

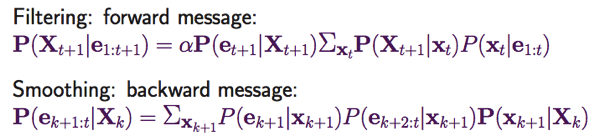

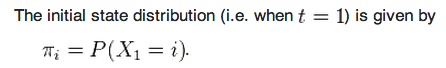

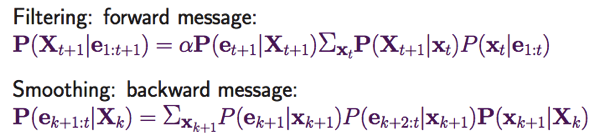

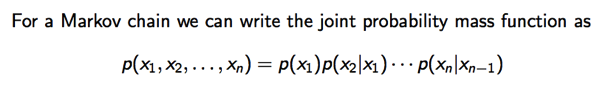

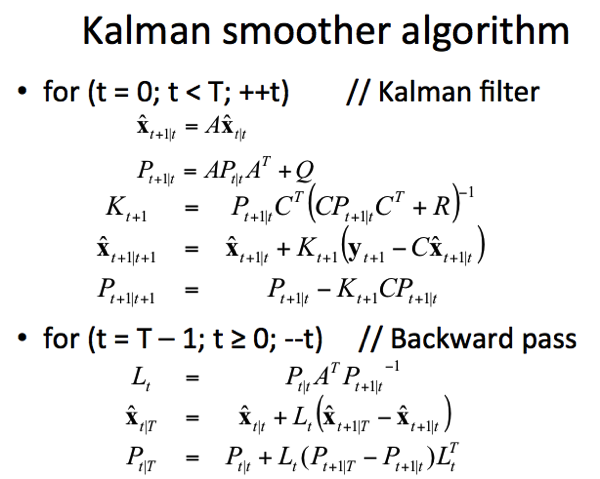

Viterbi 是假設 transition and emission probabilities 為已知,根據觀察到的 data 決定最有可能的 state sequence, i.e. P(x1, x2, .., xk | y1, y2, … yk)

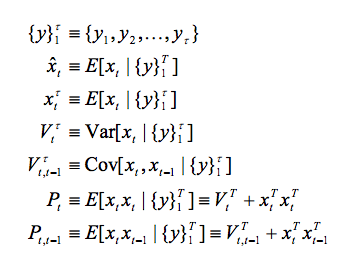

Kalman filter/smoother 是假設 transition and emission pdf (Gaussian) 為已知,根據觀察到的 data 決定最有可能 state conditional probability P(xk | y1, y2, .. yk or yn) at k-th moment (point-wise)! yk is Kalman filter, yn is Kalman smoother.

HMM 是否可以有 point-wise 的 estimation like Kalman filter. 答案是 yes. 但是 HMM with finite state 和 linear space 一個很不同的特性是有些 transition 是禁止的 (probability = 0,想想 convolution code or trellis code modulation). 如果只做 point-wise estimation, 最後可能得到錯誤的 transition (就 overall sequence probability = 0)! 可以參考本文 for more information of Viterbi algorithm.

Kalman filter and Convolution Code and HMM 比較

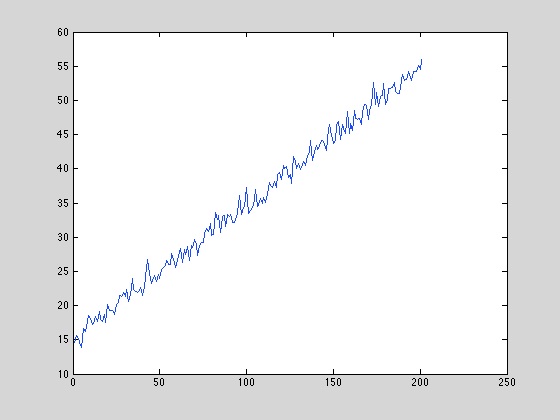

Kalman filter: linear space model -> state is continuous Gaussian PDF -> observed data is continuous Gaussian PDF

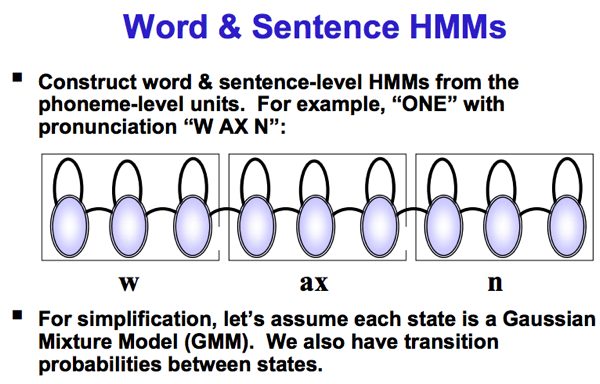

HMM: state is discrete (finite or infinite) -> transition probability matrix and emission probability matrix

Convolution code or Trellis code: state is finite discrete -> transition probability matrix is known. observed data is continuous Gaussian PDF

Therefore, Kalman filter is applied to linear space model, ML on the point-wise of given data (Kalman filter/smoother)

HMM and Convolution code/Trellis code decode with known transition probability and emission probability matrix/Gaussian PDF, ML on the sequence of given data using Viterbi algorithm.

The Convolution code/Trellis code decoding has two flavors: one is called hard decision, quantized the received data to the finite output states like HMM. Or soft decision, no quantization. The soft decision performance is better than hard decision because it keeps more information.

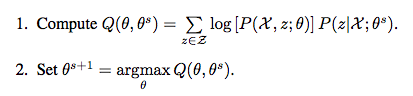

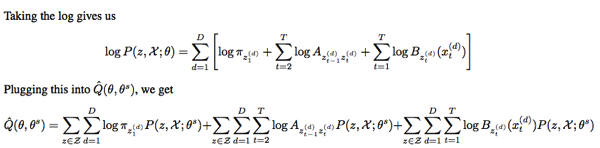

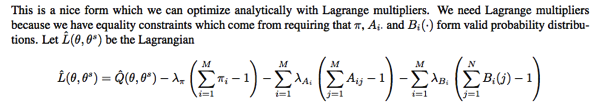

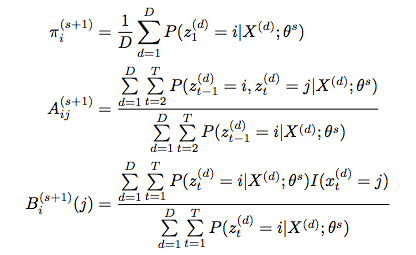

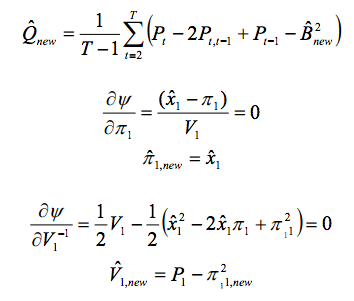

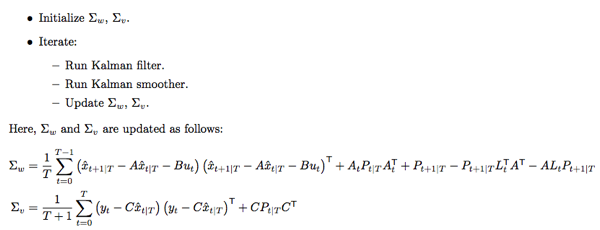

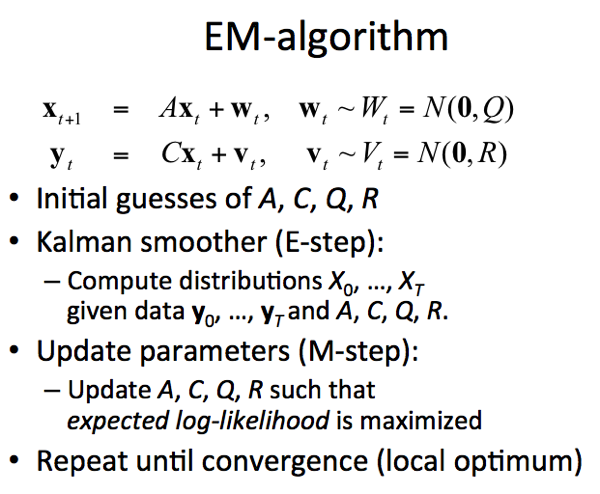

Kalman filter EM algorithm 和 HMM Baum-Welch algorithm 異同

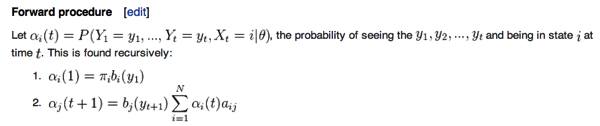

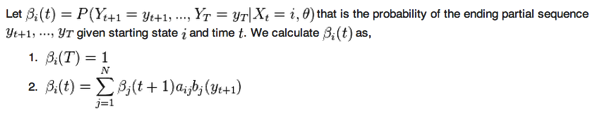

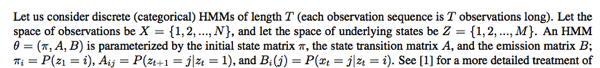

Baum-Welch algorithm 是用在 HMM, 基本上和 EM algorithm used for Kalman filter 是類似的 algorithm.

Baum-Welch algorithm in HMM 相當於 EM algorithm in Kalman filter. Unknowns 有些不同,forward and backward pass 相同。

HMM Baum-Welch algorithm 和 HMM Viterbi algorithm 的不同

簡單來說,Viterbi algorithm 是假設 transition and emission probabilities 為已知,根據觀察到的 data 決定最有可能 的 state sequence.

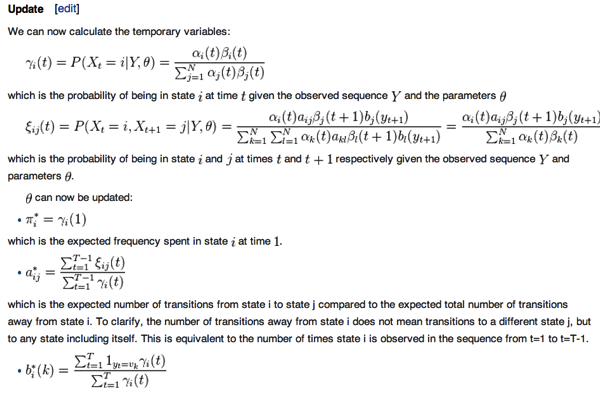

Baum-Welch algorithm 則是 transition and emission probabilities 為未知,根擄觀察的 data 決定最有可能的 transition, emission probability, and initial state probability distribution.

The Baum-Welch algorithm and the Viterbi algorithm calculate different things.

If you know the transition probabilities for the hidden part of your model, and the emission probabilities for the visible outputs of your model, then the Viterbi algorithm gives you the most likely complete sequence of hidden states conditional on both your outputs and your model specification.

The Baum-Welch algorithm gives you both the mostl likely hidden transition probabilities as well as the most likely set of emission probabilities given only the observed states of the model (and, usually, an upper bound on the number of hidden states). You also get the “pointwise” highest likelihood points in the hidden states, which is often slightly different from the single hidden sequence that is overall most likely.

If you know your model and just want the latent states, then there is no reason to use the Baum-Welch algorithm. If you don’t know your model, then you can’t be using the Viterbi algorithm.

Edited to add: See Peter Smit’s comment; there’s some overlap/vagueness in nomenclature. Some poking around led me to a chapter by Luis Javier Rodrıguez and Ines Torres in “Pattern Recognition and Image Analysis” (ISBN 978-3-540-40217-6, pp 845-857) which discusses the speed versus accuracy trade-offs of the two algorithms.

Briefly, the Baum-Welch algorithm is essentially the Expectation-Maximization algorithm applied to a HMM; as a strict EM-type algorithm you’re guaranteed to converge to at least a local maximum, and so for unimodal problems find the MLE. It requires two passes over your data for each step, though, and the complexity gets very big in the length of the data and number of training samples. However you do end up with the full conditional likelihood for your hidden parameters.

The Viterbi training algorithm (as opposed to the “Viterbi algorithm”) approximates the MLE to achieve a gain in speed at the cost of accuracy. It segments the data and then applies the Viterbi algorithm (as I understood it) to get the most likely state sequence in the segment, then uses that most likely state sequence to re-estimate the hidden parameters. This, unlike the Baum-Welch algorithm, doesn’t give the full conditional likelihood of the hidden parameters, and so ends up reducing the accuracy while saving significant (the chapter reports 1 to 2 orders of magnitude) computational time.

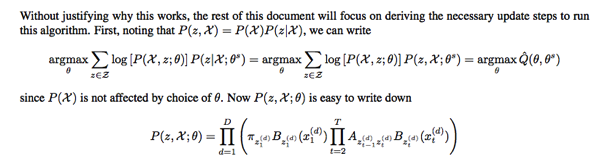

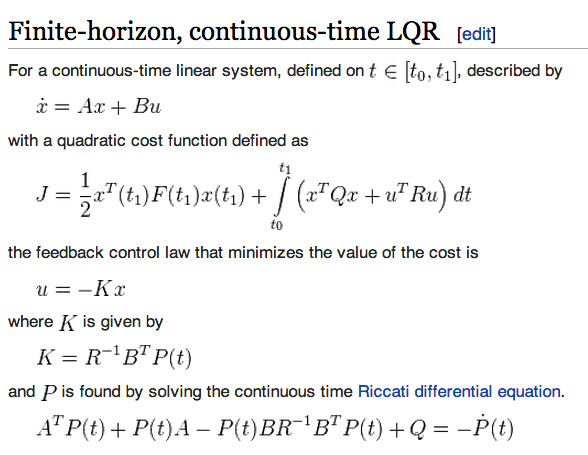

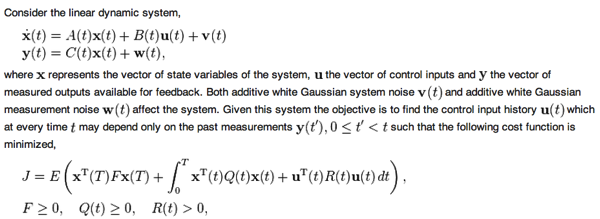

LQR 和 LQG 的差異

LQG = LQR (control policy) + Kalman filter (LQE state estimation with noisy observables)

Separation principle applies to LQR (control) and Kalman filter (state estimation)

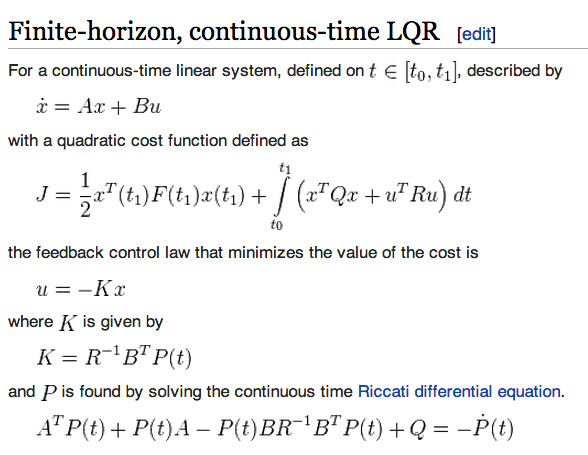

LQR 如下:

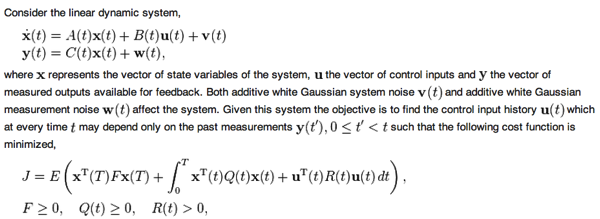

LQG 如下:

The LQG controller is simply the combination of a Kalman filter i.e. a linear-quadratic estimator (LQE) with a linear-quadratic regulator (LQR).

Markov Decision Policy and LQR 異同

Are they the same things? It is similar to HMM vs. Kalman filter

LQR

1. continuous states

2. linear space model

3. Gaussian

Q&A

HMM state is discrete of the PMF -> not significant, 應該是 transition/emission probability matrix PMF 對應 transition/emission Gaussian PDF.

HMM uses Viterbi algorithm to determine the best state like Kalman filter? No -> Viterbi is the maximum likelihood of state sequence estimator of P(x1, x2, .. xk | y1, y2, .. yk). Kalman filter is point-like maximum likelihood state estimator P(xk | y1, .. yk).

Determined by the policy function? No -> 完全沒關係